4. SEGMENTATION AND EDGE DETECTION

4.1 Region Operations

Discovering regions can be a very simple exercise, as illustrated in 4.1.1. However, more often than not, regions are required that cover a substantial area of the scene rather than a small group of pixels.

4.1.1 Crude edge detection

USE. To reconsider an image as a set of regions.

OPERATION. There is no operation involved here. The regions are simply identified as containing pixels of the same gray level; the boundaries of the regions (contours) lie at the cracks between the pixels rather than at pixel positions.

Such a region detection may give far too many regions to be useful (unless the number of gray levels is relatively small). A simple approach is therefore to group pixels into ranges of nearby values (quantizing or bunching). The ranges can be chosen by considering the image histogram in order to identify good bunching for region purposes. This results in a merging of regions based on overall gray-level statistics rather than on the gray levels of pixels that are geographically near one another.
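The crude detection and bunching described above can be sketched in Python. This is a minimal sketch under stated assumptions: the image is a list of lists of integer gray levels, regions are grown with 4-connectivity (above, below, left, right), and bunching uses a fixed bin width (the hypothetical parameter `bin_width`) rather than ranges chosen from the histogram. The names `quantize` and `label_regions` are illustrative only.

```python
from collections import deque

def quantize(image, bin_width):
    """Bunch gray levels into ranges of width bin_width (fixed-width bins, an assumption)."""
    return [[value // bin_width for value in row] for row in image]

def label_regions(image):
    """Label 4-connected regions whose pixels share the same (possibly quantized) value.

    Returns (labels, count). Region boundaries lie on the cracks between
    neighboring pixels that carry different labels.
    """
    rows, cols = len(image), len(image[0])
    labels = [[-1] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if labels[r][c] != -1:
                continue  # already part of an earlier region
            value = image[r][c]
            labels[r][c] = count
            queue = deque([(r, c)])
            while queue:  # breadth-first flood fill over equal-valued neighbors
                y, x = queue.popleft()
                for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < rows and 0 <= nx < cols
                            and labels[ny][nx] == -1 and image[ny][nx] == value):
                        labels[ny][nx] = count
                        queue.append((ny, nx))
            count += 1
    return labels, count
```

For example, the 3 x 3 image `[[10, 11, 50], [12, 13, 52], [90, 91, 53]]` has nine distinct gray levels and therefore nine regions, whereas after `quantize(image, 16)` it has only three.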

4.1.2 Region merging

It is often useful to do the rough gray-level split and then to apply some technique to the cracks between the regions, not to enhance edges but to identify when whole regions are worth combining, thus reducing the number of regions left by the crude region detection above.

USE. Reduce the number of regions by combining fragmented regions and determining which regions are really part of the same area.

OPERATION. Let s be the crack difference, i.e. the absolute difference in gray levels between two adjacent (above, below, left, right) pixels. Then, given a threshold value T, we can define, for each crack,

$$w = \begin{cases} 1, & \text{if } s < T \\ 0, & \text{otherwise} \end{cases}$$

i.e. w is 1 if the crack difference is below the threshold (suggesting that the regions are likely to be the same), or 0 if it is at or above the threshold.

Now measure the full length of the boundary of each of the two regions that meet at the crack; call these b1 and b2 respectively. Sum the w values along the length of the common boundary between the regions and calculate:

$$\frac{\sum w}{\min(b_1, b_2)}$$

If this is greater than a further threshold, deduce that the two regions should be joined.

Effectively, this takes the number of cracks that suggest the regions should be merged and divides it by the smaller region boundary. Of course, a particularly irregular shape may have a very long region boundary but a small area. In that case it may be preferable to measure areas (count how many pixels there are in each region).

Measuring both boundaries is better than dividing by the length of the boundary between the two regions, as it takes into account the size of the regions involved. If one region is very small, then it is likely to be added to the larger region, whereas if both regions are large, then the evidence for combining them has to be much stronger.
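The merging test above can be sketched in Python. This is a minimal sketch, assuming the regions are already labeled (a list of lists of region ids), that a region's boundary length b is the number of cracks separating it from any other region or from the image border (the border convention is an assumption), and that the caller compares the returned ratio with the further threshold. `boundary_length` and `merge_score` are hypothetical names.

```python
def boundary_length(labels, region):
    """Count cracks separating `region` from other labels or from the image border."""
    rows, cols = len(labels), len(labels[0])
    length = 0
    for y in range(rows):
        for x in range(cols):
            if labels[y][x] != region:
                continue
            for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                ny, nx = y + dy, x + dx
                if not (0 <= ny < rows and 0 <= nx < cols) or labels[ny][nx] != region:
                    length += 1
    return length

def merge_score(image, labels, a, b, T):
    """Sum of w over the cracks shared by regions a and b, divided by min(b1, b2)."""
    rows, cols = len(labels), len(labels[0])
    weak = 0
    for y in range(rows):
        for x in range(cols):
            # Look only right and down so that each crack is visited once.
            for dy, dx in ((0, 1), (1, 0)):
                ny, nx = y + dy, x + dx
                if ny >= rows or nx >= cols:
                    continue
                if {labels[y][x], labels[ny][nx]} == {a, b}:
                    s = abs(image[y][x] - image[ny][nx])  # crack difference
                    weak += 1 if s < T else 0             # w for this crack
    return weak / min(boundary_length(labels, a), boundary_length(labels, b))
```

For two 2 x 2 regions side by side in `[[10, 12, 14, 40], [11, 13, 15, 41]]` with labels `[[0, 0, 1, 1], [0, 0, 1, 1]]`, both shared cracks have s = 2, each region has a boundary of 8 cracks, and `merge_score(image, labels, 0, 1, T=5)` gives 2 / 8 = 0.25.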

4.1.3 Region splitting

Just as it is possible to start from many regions and merge them into fewer, larger regions, it is also possible to consider the image as one region and split it into more and more regions. One way of doing this is to examine the gray-level histogram. If the image is in color, better results can be obtained by examining the three color-component histograms.

USE. Sensibly subdivide an image, or part of an image, into regions of similar type.

OPERATION. Identify significant peaks in the gray-level histogram and look in the valleys between the peaks for possible threshold values. Some peaks will be more substantial than others: find splits between the "best" peaks first.

Regions are identified as containing gray levels between the thresholds. With color images, there are three histograms to choose from. The algorithm halts when no remaining peak is significant.
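A single pass of histogram-based splitting can be sketched in Python. This is a simplified sketch, not the best-first recursive procedure described above: it finds all significant peaks at once and places one threshold in each valley between consecutive peaks. The significance rule (a local maximum holding at least the hypothetical fraction `min_fraction` of all pixels) and the tie-breaking inside a flat valley (lowest gray level wins) are assumptions, as are the names `histogram`, `find_thresholds` and `split_by_thresholds`.

```python
from bisect import bisect_left

def histogram(image, levels=256):
    """Count how many pixels take each gray level 0 .. levels-1."""
    counts = [0] * levels
    for row in image:
        for value in row:
            counts[value] += 1
    return counts

def find_thresholds(hist, min_fraction=0.05):
    """Return one threshold at the lowest bin between each pair of significant peaks."""
    total = sum(hist)
    last = len(hist) - 1
    peaks = [g for g in range(len(hist))
             if hist[g] > 0 and hist[g] >= min_fraction * total
             and (g == 0 or hist[g] >= hist[g - 1])      # not lower than the left bin
             and (g == last or hist[g] > hist[g + 1])]   # strictly above the right bin
    # min() returns the first minimal bin, so ties go to the lowest gray level.
    return [min(range(p, q + 1), key=lambda g: hist[g])
            for p, q in zip(peaks, peaks[1:])]

def split_by_thresholds(image, thresholds):
    """Map each pixel to the index of the gray-level range it falls in."""
    return [[bisect_left(thresholds, value) for value in row] for row in image]
```

With `img = [[2, 2, 3, 12], [2, 3, 13, 12], [3, 2, 12, 13]]` and 16 gray levels, the significant peaks are at 2 and 12, the threshold lands at 4, and `split_by_thresholds` returns `[[0, 0, 0, 1], [0, 0, 1, 1], [0, 0, 1, 1]]`.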

LIMITATION. This technique relies on the overall histogram giving good guidance as to sensible regions. If the image is a chessboard, then region splitting works nicely. If the image shows 16 chessboards, well spaced apart on a white background sheet, then instead of identifying 17 regions, one for each chessboard and one for the background, it identifies 16 x 32 black squares, which is probably not what we wanted.

4.2 Basic Edge Detection

The edges of an image hold much of the information in that image. They tell where objects are, their shape and size, and something about their texture. An edge is where the intensity of an image moves from a low value to a high value or vice versa.

There are numerous applications for edge detection, which is often used for various special effects. Digital artists use it to create dazzling image outlines. The output of an edge detector can be added back to an original image to enhance the edges.

Edge detection is often the first step in image segmentation. Image segmentation, a field of image analysis, is used to group pixels into regions to determine an image's composition. A common example of image segmentation is the "magic wand" tool in photo editing software. This tool allows the user to select a pixel in an image. The software then draws a border around the pixels of similar value. The user may select a pixel in a sky region, and the magic wand then draws a border around the complete sky region in the image. The user may then edit the color of the sky without worrying about altering the color of the mountains or whatever else may be in the image.

Edge detection is also used in image registration. Image registration aligns two images that may have been acquired at separate times or from different sensors.

Figure 4.1 Different edge profiles: roof edge, line edge, step edge, ramp edge.

There is an infinite number of edge orientations, widths and shapes (Figure 4.1). Some edges are straight while others are curved with varying radii. There are many edge detection techniques to go with all these edges, each having its own strengths. Some edge detectors may work well in one application and perform poorly in others. Sometimes it takes experimentation to determine which edge detection technique is best for an application.

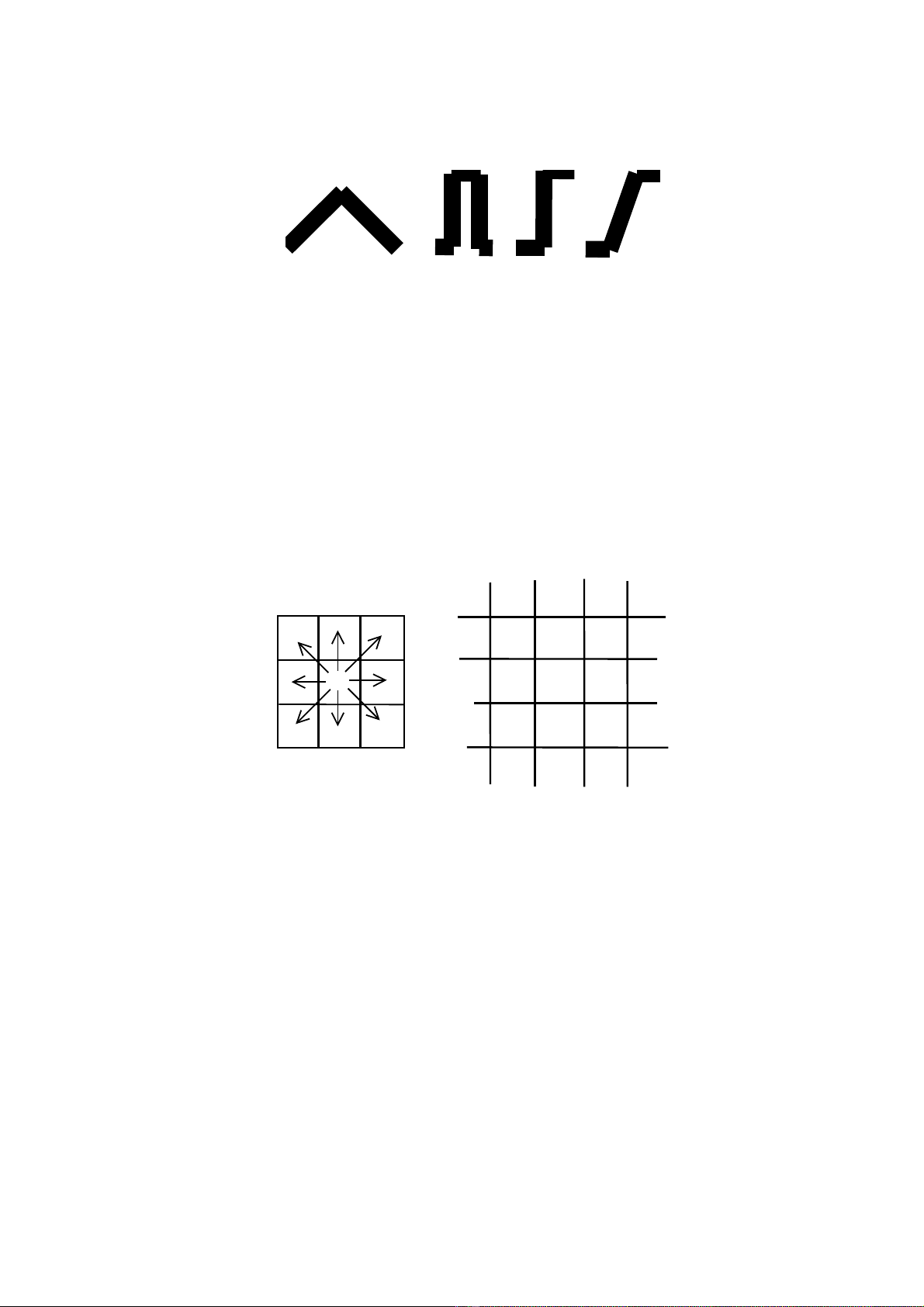

The simplest and quickest edge detectors determine the maximum value from a series of pixel subtractions. The homogeneity operator subtracts each of the 8 surrounding pixels from the center pixel of a 3 x 3 window, as in Figure 4.2. The output of the operator is the maximum of the absolute values of these differences.

Homogeneity operator, 3 x 3 image window:

11 13 15
16 11 11
16 12 11

new pixel = maximum{|11−11|, |11−13|, |11−15|, |11−16|, |11−11|, |11−16|, |11−12|, |11−11|} = 5

Figure 4.2 How the homogeneity operator works.
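The homogeneity operator can be sketched in Python as follows. This is a minimal sketch, assuming a list-of-lists gray-level image; pixels on the image border, which lack a full 3 x 3 neighborhood, are simply left at 0 here (an assumption, since the text does not say how borders are handled). The name `homogeneity_operator` is illustrative.

```python
def homogeneity_operator(image):
    """Max |center - neighbor| over the 8 neighbors of each interior pixel.

    Border pixels are left at 0 (an assumption about border handling).
    """
    rows, cols = len(image), len(image[0])
    out = [[0] * cols for _ in range(rows)]
    for y in range(1, rows - 1):
        for x in range(1, cols - 1):
            center = image[y][x]
            out[y][x] = max(abs(center - image[y + dy][x + dx])
                            for dy in (-1, 0, 1) for dx in (-1, 0, 1)
                            if (dy, dx) != (0, 0))  # skip the center itself
    return out
```

On the window `[[11, 13, 15], [16, 11, 11], [16, 12, 11]]`, which reproduces the eight differences listed for Figure 4.2, the center output is 5 (from |11 − 16|).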

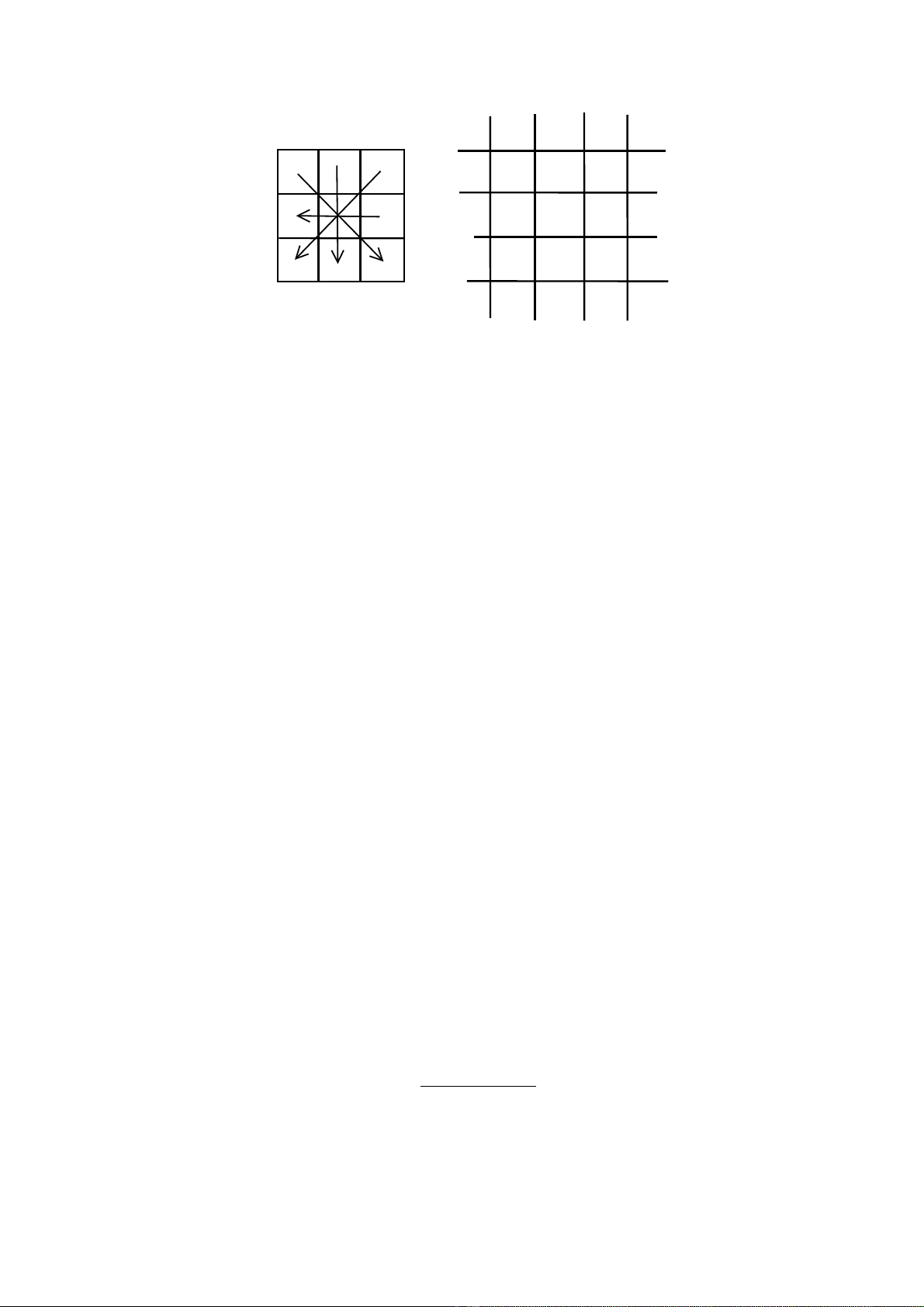

Similar to the homogeneity operator is the difference edge detector. It operates more quickly because it requires four subtractions per pixel as opposed to the eight needed by the homogeneity operator. The subtractions are upper left − lower right, middle left − middle right, lower left − upper right, and top middle − bottom middle (Figure 4.3).

Difference operator, same 3 x 3 image window:

11 13 15
16 11 11
16 12 11

new pixel = maximum{|11−11|, |13−12|, |15−16|, |11−16|} = 5

Figure 4.3 How the difference operator works.
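The difference edge detector can be sketched the same way. This is a minimal sketch, assuming a list-of-lists gray-level image and leaving border pixels at 0 (an assumption); `PAIRS` and `difference_operator` are illustrative names. Each entry of `PAIRS` holds the (dy, dx) offsets of one pair of opposite neighbors, in the order given in the text.

```python
# (dy, dx) offsets of the four pairs of opposite neighbors around the center pixel.
PAIRS = (
    ((-1, -1), (1, 1)),   # upper left - lower right
    ((0, -1), (0, 1)),    # middle left - middle right
    ((1, -1), (-1, 1)),   # lower left - upper right
    ((-1, 0), (1, 0)),    # top middle - bottom middle
)

def difference_operator(image):
    """Max absolute difference over the four opposite-neighbor pairs.

    Only four subtractions per pixel; the center value itself is not used.
    Border pixels are left at 0 (an assumption about border handling).
    """
    rows, cols = len(image), len(image[0])
    out = [[0] * cols for _ in range(rows)]
    for y in range(1, rows - 1):
        for x in range(1, cols - 1):
            out[y][x] = max(abs(image[y + ay][x + ax] - image[y + by][x + bx])
                            for (ay, ax), (by, bx) in PAIRS)
    return out
```

On the window `[[11, 13, 15], [16, 11, 11], [16, 12, 11]]` the four differences are 0, 5, 1 and 1, so the center output is 5, matching the homogeneity operator at a lower cost.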

4.2.1 First order derivative for edge detection

If we are looking for horizontal edges, it would seem sensible to calculate the difference between one pixel value and the next pixel value, either up or down from the first (called the crack difference), i.e. assuming a top-left origin:

Hc = Y_difference(x, y) = value(x, y) – value(x, y+1)

In effect this is equivalent to convolving the image with a 2 x 1 template:

$$\begin{bmatrix} 1 \\ -1 \end{bmatrix}$$

Likewise

Hr = X_difference(x, y) = value(x, y) – value(x – 1, y)

uses the template

$$\begin{bmatrix} -1 & 1 \end{bmatrix}$$

Hc and Hr are column and row detectors. Occasionally it is useful to plot both X_difference and Y_difference, combining them to create the gradient magnitude (i.e. the strength of the edge). Combining them by simply adding them could mean two edges canceling each other out (one positive, one negative), so it is better to sum absolute values (ignoring the sign) or sum the squares of them and then, possibly, take the square root of the result.

It is also possible to divide the Y_difference by the X_difference and identify a gradient direction (the angle of the edge between the regions):

$$\text{gradient\_direction} = \tan^{-1}\left(\frac{\text{Y\_difference}(x, y)}{\text{X\_difference}(x, y)}\right)$$

The amplitude can be determined by computing the magnitude of the vector sum of Hc and Hr:

$$H(x, y) = \sqrt{H_c(x, y)^2 + H_r(x, y)^2}$$

Sometimes, for computational simplicity, the magnitude is computed as

$$H(x, y) = |H_c(x, y)| + |H_r(x, y)|$$

The edge orientation can be found by

$$\theta = \tan^{-1}\left(\frac{H_c(x, y)}{H_r(x, y)}\right)$$
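The first-order quantities above can be sketched for a single pixel in Python. This is a minimal sketch, assuming a list-of-lists image indexed `image[y][x]` with a top-left origin, and a pixel that has a neighbor below it and to its left. It uses `math.atan2(hc, hr)`, the four-quadrant form of tan⁻¹(Hc / Hr), which stays defined when Hr is 0; that substitution is made here for robustness and is not taken from the text. The name `first_order_gradient` is hypothetical.

```python
import math

def first_order_gradient(image, x, y):
    """Return (Hc, Hr, H, |Hc| + |Hr|, theta) at pixel (x, y).

    x is the column and y the row, with the origin at the top left.
    """
    hc = image[y][x] - image[y + 1][x]   # Hc = value(x, y) - value(x, y+1)
    hr = image[y][x] - image[y][x - 1]   # Hr = value(x, y) - value(x-1, y)
    magnitude = math.sqrt(hc ** 2 + hr ** 2)   # vector-sum amplitude
    abs_magnitude = abs(hc) + abs(hr)          # cheaper approximation
    theta = math.atan2(hc, hr)                 # orientation in radians
    return hc, hr, magnitude, abs_magnitude, theta
```

For `[[0, 0, 0], [4, 7, 0], [0, 3, 0]]` at x = 1, y = 1, Hc = 7 − 3 = 4 and Hr = 7 − 4 = 3, so H = 5.0 while |Hc| + |Hr| = 7, showing how the cheaper form overestimates the amplitude for diagonal gradients.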

In real images, edges are rarely so well defined; more often the change between regions is gradual and noisy.

The following image represents a typical real edge. A larger template is needed to average the gradient over a number of pixels, rather than looking at only two:

3 4 4 4 3 3 2 1 0 0
2 3 4 2 3 3 4 0 1 0
3 3 3 3 4 3 3 1 0 0
3 2 3 3 4 3 0 2 0 0
2 4 2 0 0 0 1 0 0 0
3 3 0 2 0 0 0 0 0 0

4.2.2 Sobel edge detection

The Sobel operator is more sensitive to diagonal edges than to vertical and horizontal edges.

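The Sobel 3 x 3 templates are normally given as

X-direction:

$$\begin{bmatrix} 1 & 2 & 1 \\ 0 & 0 & 0 \\ -1 & -2 & -1 \end{bmatrix}$$

Y-direction:

$$\begin{bmatrix} -1 & 0 & 1 \\ -2 & 0 & 2 \\ -1 & 0 & 1 \end{bmatrix}$$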

Original image:

3 4 4 4 3 3 2 1 0 0
2 3 4 2 3 3 4 0 1 0
3 3 3 3 4 3 3 1 0 0
3 2 3 3 4 2 0 2 0 0
2 4 2 0 0 0 1 0 0 0
3 3 0 2 0 0 0 0 0 0

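Output image: absA + absB, i.e. |A| + |B|, where A and B are the responses to the X-direction and Y-direction templates.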
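The absA + absB computation can be sketched in Python on the original image above. This is a minimal sketch under stated assumptions: the sign convention in `SOBEL_X` and `SOBEL_Y` is assumed (because only |A| and |B| are used, flipping the sign of either template does not change the output), each template is applied as a weighted sum over the 3 x 3 neighborhood without flipping, and border pixels are left at 0. `apply_template` and `sobel` are illustrative names.

```python
# Sobel templates; the sign convention is an assumption (|A| + |B| ignores it).
SOBEL_X = ((1, 2, 1), (0, 0, 0), (-1, -2, -1))
SOBEL_Y = ((-1, 0, 1), (-2, 0, 2), (-1, 0, 1))

# Gray levels of the "Original image" in the text.
ORIGINAL = [
    [3, 4, 4, 4, 3, 3, 2, 1, 0, 0],
    [2, 3, 4, 2, 3, 3, 4, 0, 1, 0],
    [3, 3, 3, 3, 4, 3, 3, 1, 0, 0],
    [3, 2, 3, 3, 4, 2, 0, 2, 0, 0],
    [2, 4, 2, 0, 0, 0, 1, 0, 0, 0],
    [3, 3, 0, 2, 0, 0, 0, 0, 0, 0],
]

def apply_template(image, template, y, x):
    """Weighted sum of the 3 x 3 neighborhood centered on (y, x)."""
    return sum(template[i][j] * image[y + i - 1][x + j - 1]
               for i in range(3) for j in range(3))

def sobel(image):
    """Return |A| + |B| for every interior pixel; border pixels stay 0."""
    rows, cols = len(image), len(image[0])
    out = [[0] * cols for _ in range(rows)]
    for y in range(1, rows - 1):
        for x in range(1, cols - 1):
            a = apply_template(image, SOBEL_X, y, x)  # X-direction response A
            b = apply_template(image, SOBEL_Y, y, x)  # Y-direction response B
            out[y][x] = abs(a) + abs(b)
    return out
```

For example, `sobel(ORIGINAL)[1][1]` is 8 (A = 3, B = 5) and `sobel(ORIGINAL)[3][3]` is 12 (A = 11, B = 1). Each value combines differences over a whole 3 x 3 neighborhood rather than a single pair of pixels, which is the averaging the text calls for on noisy real edges.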