Document introduction

This lecture, presented by instructor Hoang Anh Viet, introduces the characteristics and properties of the Vietnamese language, along with various word segmentation methods. It covers studies of Vietnamese by researchers in Vietnam and abroad, different approaches to language processing, and specific methods such as longest matching, transformation-based learning, and statistical models.

Intended audience

Students need a solid grounding in the theory of computation, compilers, and probability and statistics to understand and apply the methods presented.

Summary

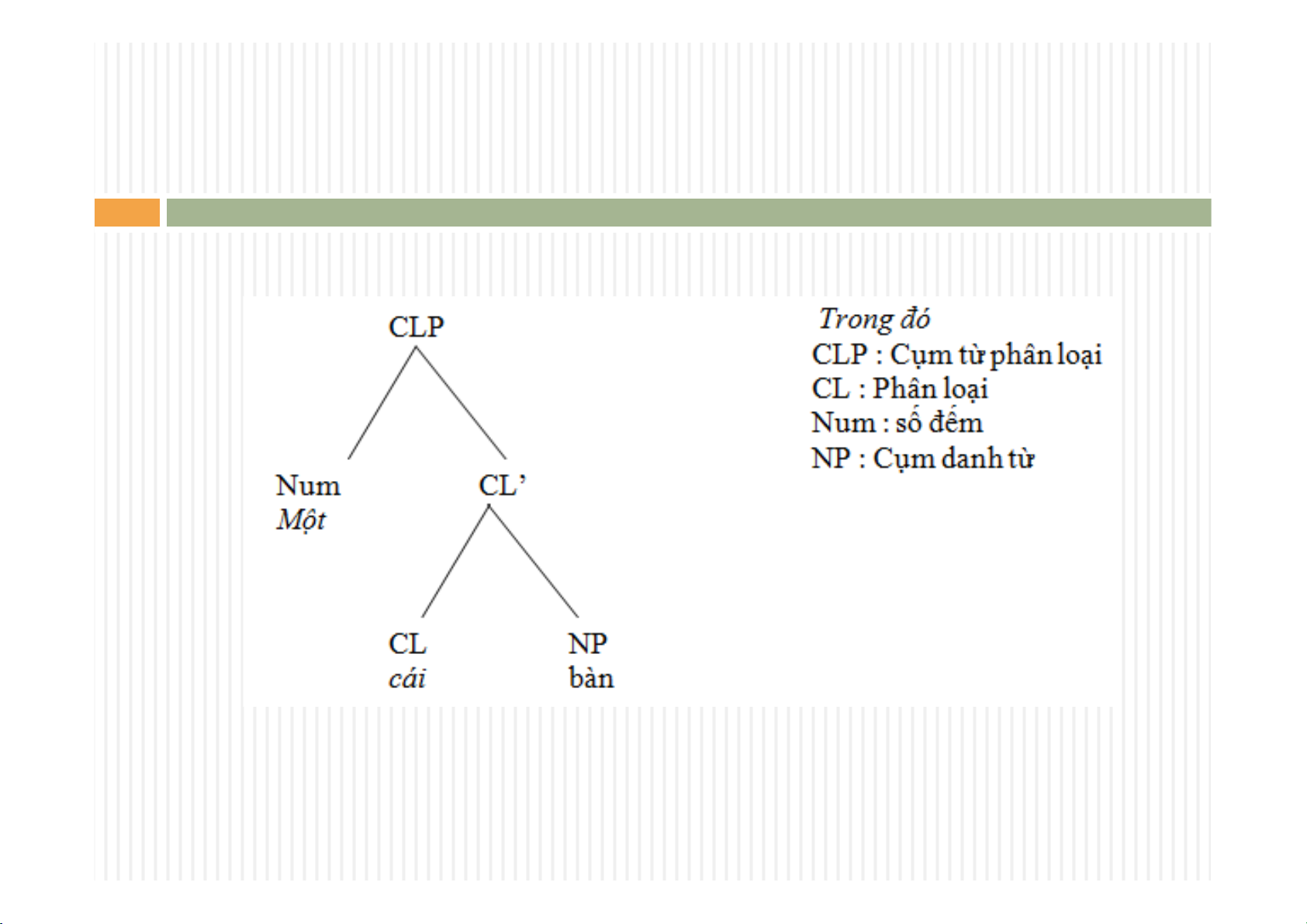

The lecture provides an overview of research on the Vietnamese language, including works by Thompson (1965), Shum (1965), Beatty (1990), Nguyen Tai Can (1975), Ho Le (1992), and Diep Quang Ban (1999). Each author approaches the structure of Vietnamese noun phrases from a different perspective. For example, Thompson focuses on noun phrase structure, Shum describes that structure using formal rules, and Nguyen Tai Can divides the noun phrase into three parts: an initial part, a central part, and a final part.

The lecture also mentions approaches to Vietnamese word segmentation, including dictionary-based methods, statistical methods, and hybrid methods. Specific methods include longest matching, transformation-based learning (TBL), weighted finite-state transducers (WFST), maximum entropy (ME), hidden Markov models (HMM), and support vector machines (SVM).
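Of the methods listed above, longest matching is the simplest to illustrate. The following is a minimal sketch, not the lecture's own implementation: the function name `longest_matching`, the `max_len` limit, and the toy `LEXICON` are all assumptions made for this example. At each position it greedily takes the longest run of syllables found in the lexicon and falls back to a single syllable otherwise.

```python
def longest_matching(syllables, lexicon, max_len=4):
    """Greedy left-to-right segmentation (illustrative sketch).

    syllables: list of syllables in the input phrase
    lexicon:   set of known words, each written as space-joined syllables
    max_len:   assumed upper bound on word length in syllables
    """
    words = []
    i = 0
    while i < len(syllables):
        # Try the longest candidate first; a single syllable always matches.
        for n in range(min(max_len, len(syllables) - i), 0, -1):
            candidate = " ".join(syllables[i:i + n])
            if n == 1 or candidate in lexicon:
                words.append(candidate)
                i += n
                break
    return words


# Toy lexicon, assumed for illustration only.
LEXICON = {"học sinh", "sinh học"}

result = longest_matching("học sinh học sinh học".split(), LEXICON)
print(result)
```

Because the choice at each position is greedy, this sketch commits to "học sinh" at the start and never considers the alternative reading that begins with "học" followed by "sinh học". That limitation is one reason statistical and hybrid methods are also studied.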

Furthermore, the lecture introduces the use of finite-state automata in Vietnamese word segmentation: syllable and vocabulary automata are built first, and a graph is then used to represent the possible analyses of a phrase. The graph method helps resolve ambiguities by finding the shortest paths on the graph.
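The graph idea can be sketched as follows, under some stated assumptions: a plain Python set stands in for the vocabulary automaton, nodes are the positions between syllables, an edge from `i` to `j` means that syllables `i..j-1` form a lexicon word (or a single syllable), and "shortest" means fewest words. The helper names `build_edges` and `shortest_segmentations` are hypothetical.

```python
def build_edges(syllables, lexicon, max_len=4):
    """Map each start position i to the end positions j reachable by one word."""
    n = len(syllables)
    edges = {i: [] for i in range(n)}
    for i in range(n):
        for j in range(i + 1, min(n, i + max_len) + 1):
            # Single syllables are always allowed so the graph stays connected.
            if j == i + 1 or " ".join(syllables[i:j]) in lexicon:
                edges[i].append(j)
    return edges


def shortest_segmentations(syllables, lexicon, max_len=4):
    """Return every segmentation that uses the minimum number of words."""
    n = len(syllables)
    edges = build_edges(syllables, lexicon, max_len)

    # dist[i] = fewest words needed to cover syllables[i:], filled right to left.
    dist = [0] * (n + 1)
    for i in range(n - 1, -1, -1):
        dist[i] = 1 + min(dist[j] for j in edges[i])

    def expand(i):
        # Follow only edges that lie on some shortest path to node n.
        if i == n:
            return [[]]
        results = []
        for j in edges[i]:
            if dist[j] + 1 == dist[i]:
                word = " ".join(syllables[i:j])
                results.extend([word] + rest for rest in expand(j))
        return results

    return expand(0)


# Toy lexicon, assumed for illustration only.
LEXICON = {"học sinh", "sinh học"}

paths = shortest_segmentations("học sinh học sinh học".split(), LEXICON)
for p in paths:
    print(p)
```

With this toy lexicon, more than one path has the minimum length, so the shortest-path criterion narrows the candidates without always selecting a single analysis. A further scoring step, such as a statistical model, would be needed to choose among them.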

The lecture also covers statistical methods that use document frequency (DF) from search engines to approximate the probability of a word appearing on the Internet, and that measure the association between words using mutual information (MI). Finally, the lecture introduces genetic algorithms, a global optimization method, to find the most plausible word segmentation by evolving a population over many generations.
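To make the DF and MI step concrete, here is a small sketch that uses the standard pointwise mutual information formula, log2(p(x, y) / (p(x) p(y))). The lecture's exact MI definition may differ. The function names, the `total_docs` parameter, and all counts below are made-up values for illustration, not real search engine figures.

```python
import math


def probability(df, total_docs):
    """Approximate p(w) as document frequency divided by the number of indexed documents."""
    return df / total_docs


def pointwise_mi(df_x, df_y, df_xy, total_docs):
    """Standard pointwise mutual information between two syllables or words.

    df_x, df_y: documents containing x and y respectively
    df_xy:      documents containing x and y together
    """
    p_x = probability(df_x, total_docs)
    p_y = probability(df_y, total_docs)
    p_xy = probability(df_xy, total_docs)
    return math.log2(p_xy / (p_x * p_y))


# Hypothetical counts: 1,000,000 indexed documents, each item in 1,000 of them,
# and both together in 500.
score = pointwise_mi(df_x=1_000, df_y=1_000, df_xy=500, total_docs=1_000_000)
print(round(score, 3))
```

A high score suggests that the two items co-occur far more often than chance would predict, which is evidence that they form a single word. A score near zero suggests they are independent. A fitness function for the genetic algorithm could plausibly combine such scores over a whole candidate segmentation, although the summary above does not specify how.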