Trịnh Tấn Đạt

Khoa CNTT – Đại Học Sài Gòn

Email: trinhtandat@sgu.edu.vn

Website: https://sites.google.com/site/ttdat88/

Contents

Introduction: dimensionality reduction and feature selection

Dimensionality Reduction

Principal Component Analysis (PCA)

Fisher’s linear discriminant analysis (LDA)

Example: Eigenface

Feature Selection

Homework

Introduction

High-dimensional data often contain redundant features

reduce the accuracy of data classification algorithms

slow down the classification process

be a problem in storage and retrieval

hard to interpret (visualize)

Why we need dimensionality reduction???

To avoid “curse of dimensionality”

To reduce feature measurement cost

To reduce computational cost

https://en.wikipedia.org/wiki/Curse_of_dimensionality

Introduction

Dimensionality reduction is one of the most popular techniques to remove noisy (i.e., irrelevant)

and redundant features.

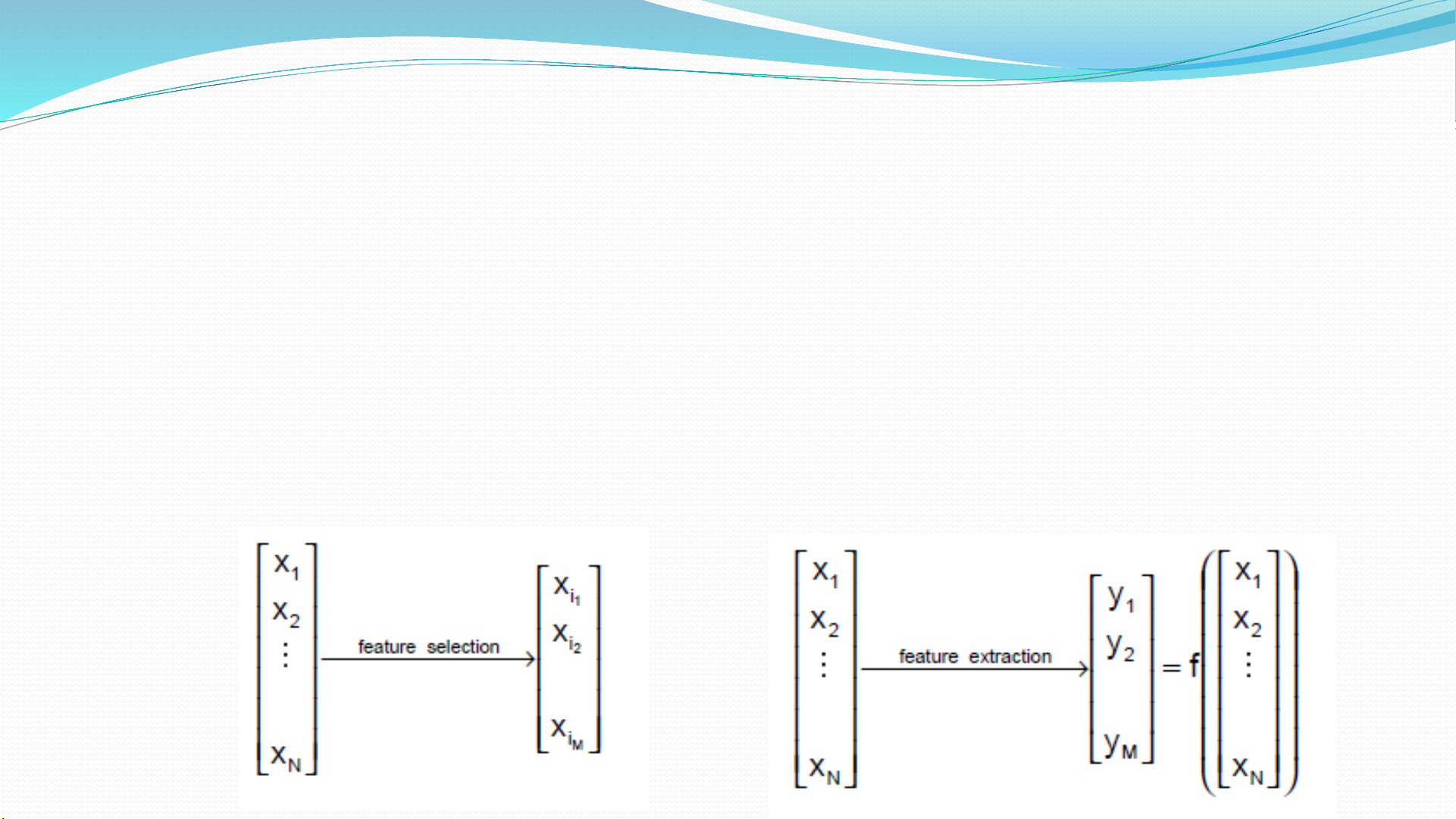

Dimensionality reduction techniques: feature extraction v.s feature selection

feature extraction: given N features (set X), extract M new features (set Y) by linear or non-

linear combination of all the N features (i.e. PCA, LDA)

feature selection: choose a best subset of highly discriminant features of size M from the

available N features (i.e. Information Gain, ReliefF, Fisher Score)