Linear regression (review)

Overview

1. Introduction

2. Application

3. EDA

4. Learning Process

5. Bias-Variance Tradeoff

6. Regression (review)

7. Classification

8. Validation

9. Regularisation

10. Clustering

11. Evaluation

12. Deployment

13. Ethics

Lecture outline

- The regression model formulation

- Understanding the regression results

- Potential problems in the regression model (and its training data)

The linear regression formulation

Approximated by

By minimising
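For reference, the formulation can be written out in a standard form. This is a sketch assuming ordinary least squares with $p$ predictors and $n$ observations; the symbols ($\beta_j$, $\varepsilon$, $\mathrm{RSS}$) are assumed notation, not taken from the slide. The response is modelled as

$$
Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \dots + \beta_p X_p + \varepsilon ,
$$

which is approximated for each observation $i$ by

$$
\hat{y}_i = \hat{\beta}_0 + \hat{\beta}_1 x_{i1} + \hat{\beta}_2 x_{i2} + \dots + \hat{\beta}_p x_{ip} ,
$$

where the estimates $\hat{\beta}_0, \dots, \hat{\beta}_p$ are chosen by minimising the residual sum of squares

$$
\mathrm{RSS} = \sum_{i=1}^{n} \left( y_i - \hat{y}_i \right)^2 .
$$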

An example…

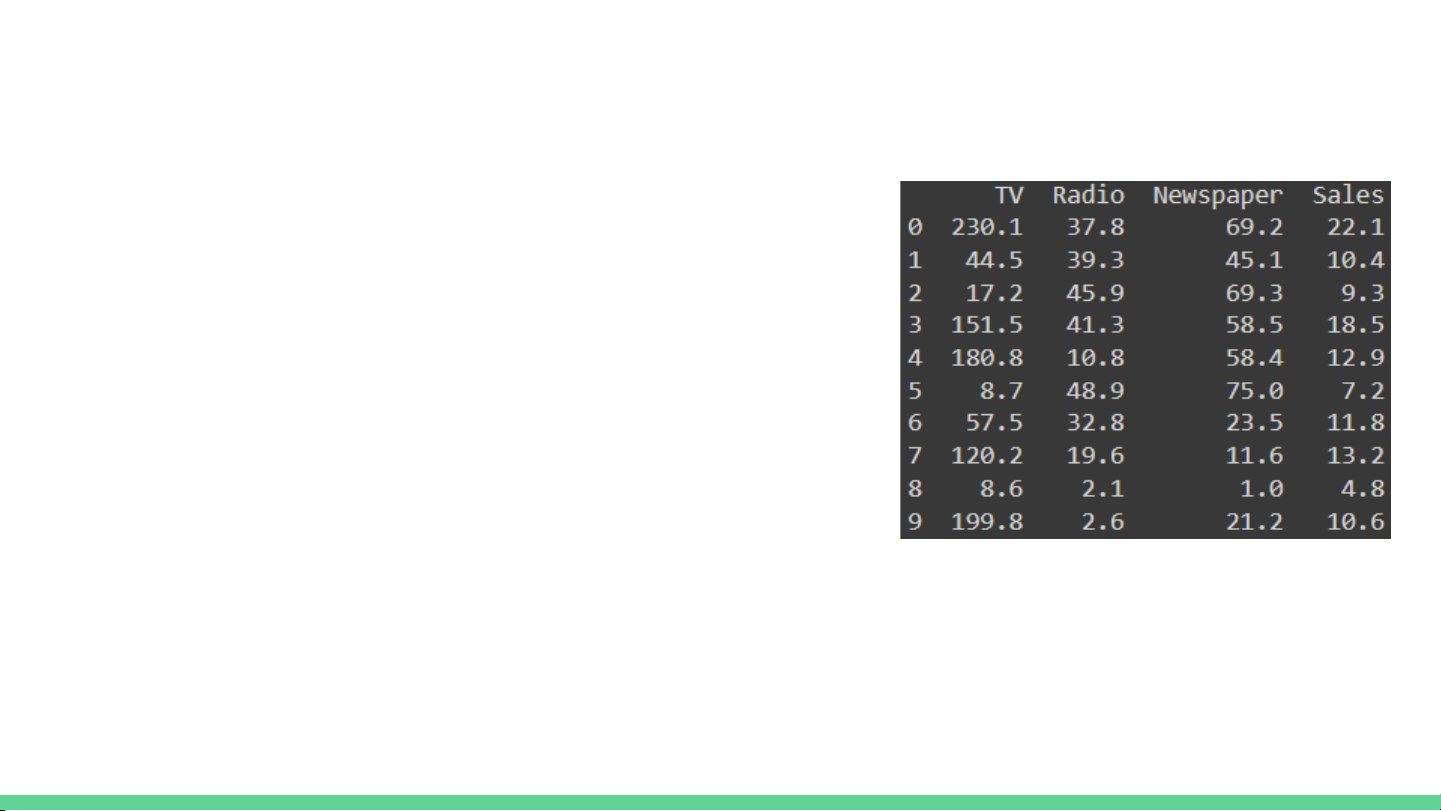

The ‘Advertising’ dataset

- Sales in 200 different markets, together with the budget spent on marketing in 3 media types: TV, Radio and Newspaper.

- Sales are measured in thousands of units.

- Marketing budgets are measured in thousands of dollars.

We have been given a regression model of Sales on TV, Radio and Newspaper…
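To make the idea of regressing Sales on three budgets concrete, here is a minimal sketch of a least-squares fit using NumPy. It does not load the Advertising dataset: the data below are synthetic, and the helper names (`fit_ols`, `predict`, `rss`) and the coefficient values in `true_beta` are made up purely for illustration.

```python
import numpy as np


def fit_ols(X, y):
    """Return least-squares coefficients [intercept, slope_1, ..., slope_p]."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    # Prepend a column of ones so the first coefficient is the intercept.
    design = np.column_stack([np.ones(len(X)), X])
    # lstsq minimises the residual sum of squares ||y - design @ beta||^2.
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    return beta


def predict(beta, X):
    """Fitted values for predictors X under coefficients beta."""
    X = np.asarray(X, dtype=float)
    return beta[0] + X @ beta[1:]


def rss(beta, X, y):
    """Residual sum of squares of the fit defined by beta."""
    residuals = np.asarray(y, dtype=float) - predict(beta, X)
    return float(residuals @ residuals)


# Synthetic stand-in for the real data: 200 "markets", 3 budget columns
# (TV, Radio, Newspaper) in thousand dollars. NOT the Advertising dataset.
rng = np.random.default_rng(0)
n = 200
budgets = rng.uniform(0, 100, size=(n, 3))
true_beta = np.array([3.0, 0.05, 0.2, 0.0])  # made-up values, illustration only
sales = true_beta[0] + budgets @ true_beta[1:] + rng.normal(0, 1.0, size=n)

beta_hat = fit_ols(budgets, sales)
for name, b in zip(["Intercept", "TV", "Radio", "Newspaper"], beta_hat):
    print(f"{name:>9}: {b:.3f}")
print("RSS:", round(rss(beta_hat, budgets, sales), 2))
```

With the real dataset, `budgets` would be the three budget columns and `sales` the Sales column; each slope would then be read as the change in Sales (thousand units) per extra thousand dollars of that medium's budget, holding the other two fixed.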
