Gradient Descent

Hoàng Nam Dũng

Faculty of Mathematics - Mechanics - Informatics, VNU University of Science, Vietnam National University, Hanoi

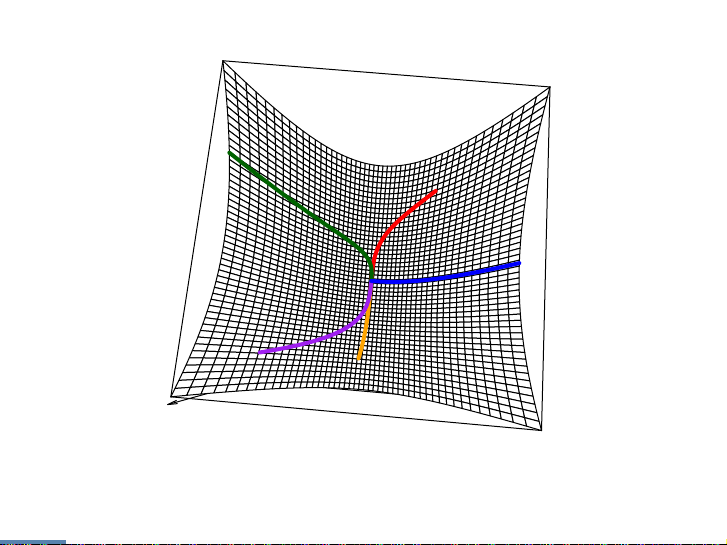

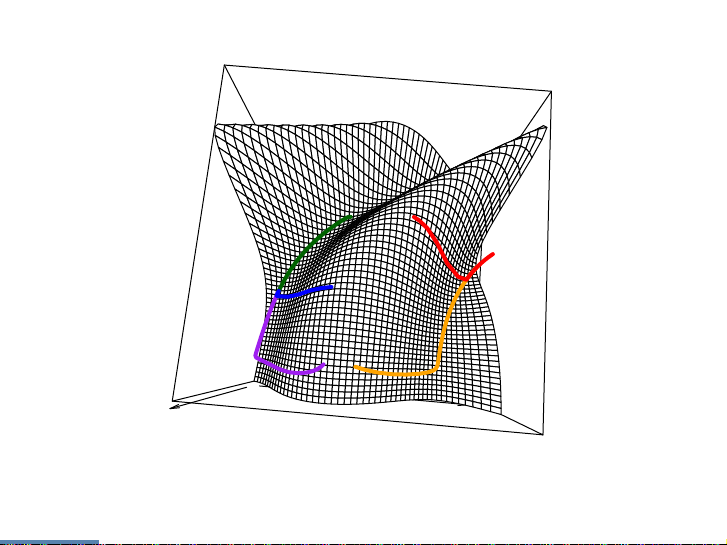

Gradient descent

Consider the unconstrained, smooth convex optimization problem

$$\min_x f(x)$$

with a convex and differentiable function $f: \mathbb{R}^n \to \mathbb{R}$. Denote the optimal value by $f^* = \min_x f(x)$ and a solution by $x^*$.
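As a concrete instance of this setup (a hypothetical example, not taken from the slides), take a quadratic with a symmetric positive definite matrix $Q$ and a vector $b$. It is convex and differentiable, and setting the gradient to zero gives $x^*$ and $f^*$ in closed form:

```latex
\begin{aligned}
f(x) &= \tfrac{1}{2}\, x^\top Q x - b^\top x, \qquad Q = Q^\top \succ 0,\\
\nabla f(x) &= Qx - b = 0 \;\Longrightarrow\; x^* = Q^{-1} b,\\
f^* &= f(x^*) = \tfrac{1}{2}\, b^\top Q^{-1} b - b^\top Q^{-1} b = -\tfrac{1}{2}\, b^\top Q^{-1} b.
\end{aligned}
```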


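Gradient descent: choose an initial point $x^{(0)} \in \mathbb{R}^n$ and step sizes $t_k > 0$, then repeat

$$x^{(k)} = x^{(k-1)} - t_k \cdot \nabla f(x^{(k-1)}), \quad k = 1, 2, 3, \dots$$

Stop at some point, for example once $\|\nabla f(x^{(k)})\|_2$ is sufficiently small.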
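The iteration above can be sketched in a few lines of NumPy. This is a minimal illustration that rests on several assumptions not present in the slides. First, the step size is fixed ($t_k = t$ for every $k$). Second, the stopping rule "gradient norm at most `tol`, or `max_iter` iterations" is one possible choice. Third, the function name `gradient_descent`, its parameters, and the test problem are hypothetical. The test problem is the quadratic $f(x) = \tfrac{1}{2} x^\top Q x - b^\top x$, whose gradient is $Qx - b$.

```python
import numpy as np


def gradient_descent(grad, x0, step, max_iter=10_000, tol=1e-8):
    """Fixed-step gradient descent (hypothetical helper).

    Applies x^(k) = x^(k-1) - step * grad(x^(k-1)) until the gradient norm
    drops to tol or below, or until max_iter updates have been made.
    """
    x = np.asarray(x0, dtype=float)
    for _ in range(max_iter):
        g = grad(x)  # gradient at the current iterate x^(k-1)
        if np.linalg.norm(g) <= tol:  # assumed stopping rule
            break
        x = x - step * g  # gradient step with constant t_k = step
    return x


# Hypothetical test problem: f(x) = 0.5 * x^T Q x - b^T x with Q symmetric
# positive definite, so grad f(x) = Q x - b and x* solves Q x = b.
Q = np.array([[3.0, 1.0], [1.0, 2.0]])
b = np.array([1.0, -1.0])

x_hat = gradient_descent(lambda x: Q @ x - b, x0=np.zeros(2), step=0.25)
print(x_hat)  # close to [0.6, -0.8], the solution of Q x = b
```

The fixed step 0.25 lies below $1/L$, where $L \approx 3.62$ is the largest eigenvalue of $Q$. This bound is a common sufficient condition for fixed-step gradient descent to converge on convex functions whose gradient is $L$-Lipschitz.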

