Subgradients

Hoàng Nam Dũng

Khoa Toán - Cơ - Tin học, Đại học Khoa học Tự nhiên, Đại học Quốc gia Hà Nội

Last time: gradient descent

Consider the problem

min

xf(x)

for fconvex and differentiable, dom(f) = Rn.

Gradient descent: choose initial x(0)∈Rn, repeat

x(k)=x(k−1)−tk· ∇f(x(k−1)),k=1,2,3, . . .

Step sizes tkchosen to be fixed and small, or by backtracking line

search

If ∇fLipschitz, gradient descent has convergence rate O(1/ε)

Downsides:

◮Requires fdifferentiable

◮Can be slow to converge

1

Outline

Today:

◮Subgradients

◮Examples

◮Properties

◮Optimality characterizations

2

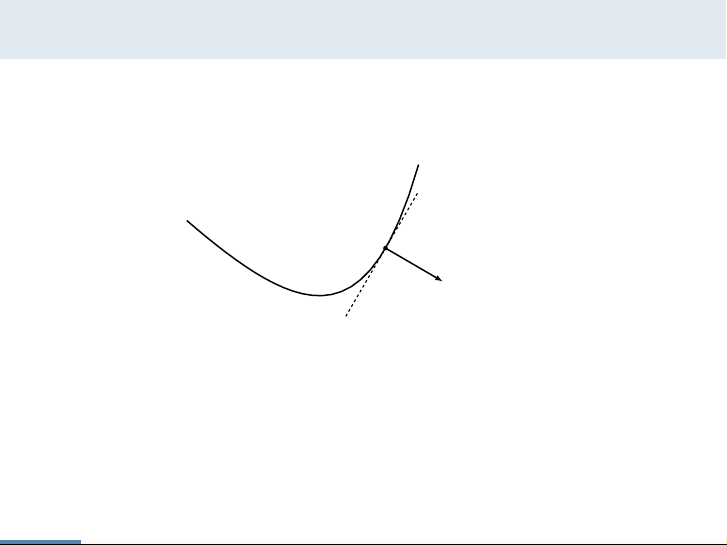

Basic inequality

Recall that for convex and differentiable f,

f(y)≥f(x) + ∇f(x)T(y−x),∀x,y∈dom(f).

(x, f(x))

∇f(x)

−1

◮The first-order approximation of fat xis a global lower

bound.

◮∇fdefines a non-vertical supporting hyperplane to epi(f)at

(x,f(x))

∇f−1 y

t!− x

f(x)!!≤0,∀(y,t)∈epi(f).3

Subgradients

Asubgradient of a convex function fat xis any g∈Rnsuch that

f(y)≥f(x) + gT(y−x),∀y∈dom(f).

◮Always exists (on the relative interior of dom(f))

◮If fdifferentiable at x, then g=∇f(x)uniquely

◮Same definition works for nonconvex f(however, subgradients

need not exist).

4

![Đề cương bài giảng Các phép toán tối ưu [mới nhất]](https://cdn.tailieu.vn/images/document/thumbnail/2021/20210303/gaocaolon10/135x160/3121614754463.jpg)

![Quyển ghi Xác suất và Thống kê [chuẩn nhất]](https://cdn.tailieu.vn/images/document/thumbnail/2025/20251030/anh26012006/135x160/68811762164229.jpg)